Knowledge graphs have turned out to be very useful in a surprising number of contexts. First-generation knowledge graphs proved to be a wonderfully efficient and portable way to encode human knowledge around concepts, entities, and relationships between them.

For example, how do you codify everything you know about a customer, a promising new drug, or anything else important? In times past, we’d encode our knowledge through writing, and put that writing in collections, such as libraries or notebooks. However, getting any use out of any knowledge there will demand a person of sufficient intelligence to read it, interpret it, and put it to work.

Obviously, moving from data to knowledge, and insight to action is difficult to do this way. It isn’t agile, for one thing. One way of measuring agility is by simply asking a question – if a new important fact becomes known, how quickly can it be put to work everywhere it is useful?

Ideally, the answer should be “immediately!” That’s one way of describing data agility: being able to make simple, powerful changes that immediately change the way data is interpreted and acted upon.

First-generation knowledge graphs proved to be good as an organized metadata structure that codifies human knowledge. Metadata is “data about data” – the emphatic word here being ‘about.’ Metadata captures concepts, entities, and relationships. At a high level, we want to encode what we know about simple things, leading to more complex and nuanced interpretations. We do this because we want very strong foundations; e.g., we want to make sure we really know what we know.

First-generation knowledge graphs proved to be good as an organized metadata structure that codifies human knowledge. Metadata is “data about data” – the emphatic word here being ‘about.’ Metadata captures concepts, entities, and relationships. At a high level, we want to encode what we know about simple things, leading to more complex and nuanced interpretations. We do this because we want very strong foundations; e.g., we want to make sure we really know what we know.

Initially, it seems quite simple to just represent things like {“John”, “is married to”, “Sue”} or something similar. But then you realize it’s a bit more complicated, depending on how the information is going to be used. For example, what do we mean by “is married to”? Do legal separations, common law, and polygamy count? Be precise.

Obviously, at some point, you want to define the term “is married to”, and then you will want to define the terms you used there, perhaps like “legally married” (by what authority?) or even what assumptions you can safely make about a name like “John” or “Sue”.

So precise meanings of terms, concepts, entities, and relationships quickly become important – if a client is married to someone else, what does that mean in context? Not all facts have the same meaning or interpretations to everyone, and that’s by design. There may be a marketing interpretation, a legal department interpretation, maybe even an HR interpretation that might be relevant. John and Sue are married; what does that mean to me, in my context?

By now, you start to appreciate that you’re entering a universe of increasingly and potentially actionable definitions, capable of aiding the many interpretations of the same facts depending on who or what is doing the interpretation and why.

Things lead to other things – where does it all stop? In reality, it never stops, as we’re always learning new things about facts and what they mean. And, as the world turns, business invents, adapts, and changes – so facts and meanings do too. That’s the whole idea, really.

Things lead to other things – where does it all stop? In reality, it never stops, as we’re always learning new things about facts and what they mean. And, as the world turns, business invents, adapts, and changes – so facts and meanings do too. That’s the whole idea, really.

At some point, the knowledge graph is rich enough to be useful to others to help them better interpret statements like “Fred is married to Jane” or similar. The good news? As we refine and improve our graph of knowledge, it will be uniformly and relevantly interpreted everywhere.

The bad news is that with first-generation knowledge graphs, we haven’t connected what we know about something with the thing itself. So knowledge graphs are not (yet) immediate, agile, and nimble effectors of getting data to respond to business change.

Knowledge Graphs Are Cool

As one who appreciates powerful new technologies, I can safely say that, yes, knowledge graphs are most definitely worth your attention – and as a result, a subset of the startup world is now navigating in that direction. This makes complete and obvious sense to me.

Yet a knowledge graph – a metadata structure sitting on a machine somewhere – has very interesting potential, but can’t do very much by itself. How do we put it to work?

It should not be surprising that not all knowledge graphs are created equally. Yes, there is much inward-focused technology discussion (e.g., who makes the best graph database, and why?). This usually happens early in every new technology cycle.

But new technologies are new tools, so it’s fair to ask – how will they be used? If the purpose of a knowledge graph is to create shared, reusable organizational knowledge with the goal of being able to make simple, powerful changes that immediately change the way data is interpreted and acted upon – then we need more than first-generation capabilities.

You’ll Want a Knowledge Graph, and…

You’ll want to tightly couple it with your data – to make a machine-addressable resource from a single data platform.

There are intimate and powerful relationships at work here:

- between knowledge and how data is interpreted in context to its state, use, and audience

- between knowledge and the data that may have created it (I’m thinking about the outcomes of machine learning here)

- between the human expertise that makes conceptual leaps to create new knowledge by interpreting and using data

So, keeping data and what we know about it together at all times is a very powerful idea, as it means that anywhere data is consumed, it will be consumed in the context of everything we know about it at that point in time. Or any previous point in time, if needed.

So, keeping data and what we know about it together at all times is a very powerful idea, as it means that anywhere data is consumed, it will be consumed in the context of everything we know about it at that point in time. Or any previous point in time, if needed.

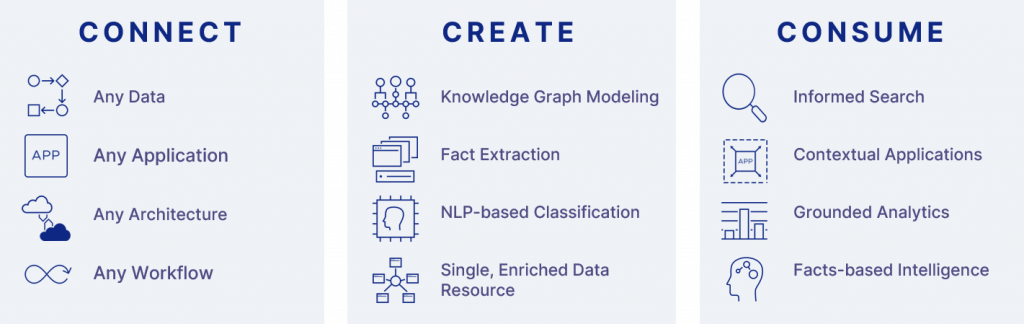

This could be useful when ingesting any new data – and by data we mean in any form, so traditional columnar data, emails from clients, research publications, etc. Something new is coming in, how do we calibrate it against grounded facts, and decide to take action or not? We have to connect the new data point with everything else we know.

There’s also value in creating new knowledge, by discovering new relationships and new meanings alongside existing ones, whether that’s done by a centralized team, skilled users, by an AI machine, or any combination.

The joining of meaning (aka semantics) with data (aka facts) leads to the next-generation knowledge graph — a semantic knowledge graph.

The semantic knowledge graph is one where existing real-world data and new synthesized data is tightly with its meaning. This new synthesized data is created using semantic AI applied over sources of record for any text asset such as a contract, report, or email. A subsequent blog will discuss this in more detail – stay tuned.

By having one place to go for data and everything you know about that data, the semantic knowledge graph becomes a high-value, investable resource. It makes everyone who uses it that much smarter, as any data item comes equipped with its own ‘backpack’ containing its place in the data universe, what you know about that data, and its definitions.

And, best of all, when that data is consumed in context, it’s much more valuable and actionable. That could be through search informed by everything the organization knows already. Or an application that could bring that organizational knowledge to bear at the point of decision. Or analytics that could be both grounded and filtered through everything else that is known, and how it is known. Or by analytics driven primarily by metadata – often called facts-based intelligence, object-based intelligence, or activity-based intelligence.

Powerful stuff, indeed.

Knowledge Graphs and Data Agility

As before, I shared that I thought the best way to view a knowledge graph is as a shared artifact around which you build processes. Those processes are ideally agile, hence data agility.

Yes, there is a decision about what tech to use to build the thing, but if you don’t insist on seeing it as a process platform that delivers data agility, history has shown your odds are very poor indeed.

There seems to be an endemic pattern that must be overcome to make any progress, and it turns out to be a very intractable one. Large organizations make big investments in technology stacks, processes, etc. Clearly, sources and consumers of data must be easily able to be integrated.

But at some point, a line is crossed. In this anti-pattern, the platform itself is envisioned as being composed of various hand-integrated components. All that needs to be done is a little integration work, which shouldn’t be too hard, right?

But any integration takes time, and that in and of itself destroys data agility. Good efforts by really smart people with plenty of resources hit an entropy wall before too long, and the approach is eventually abandoned, with lessons learned. There are literally hundreds and hundreds of examples I’ve found of this, in every industry and at every scale. Many of them are now MarkLogic customers.

This ‘integration-centric’ way of working is an architectural problem that – unless solved – will eventually fail due to its brittleness to change, caused by an absence of a strong agile foundation.

The Good News

Many organizations have an important and growing investment in capturing human knowledge in the form of knowledge graphs, or something similar like an ontology or data dictionary. That’s good on several levels – the importance is understood, smart people are working on it, there are many reusable artifacts that can move forward, and so on.

What’s missing is a platform that delivers data agility and can be used to build new processes that connect new facts with existing ones, create new knowledge, and enable people to consume data along with everything we know about it: search, applications, analytics.

Outgrowing the current platform and moving to a new approach is never an easy or simple decision, but evidence shows it must be done, as I haven’t found anyone that has had any meaningful success by trying to build an agile platform out of piece parts.

Yes, there will be gnashing of teeth and rending of garments, but – when all that is done – most involved will agree that forward progress on responding to business change has stopped, and a new approach must be found that enables a truly nimble response.

And, if this sounds like you, we’d love to talk to you about it.

Learn More

Download our white paper, Data Agility with a Semantic Knowledge Graph

Jeremy Bentley

Jeremy Bentley is the founder of Semaphore, creators of the Semaphore semantic AI platform, and joined MarkLogic as part of the Semaphore acquisition. He is an engineer by training and has spent much of his career solving enterprise information management problems. His clients are innovators who build new products using Semaphore’s modeling, auto-classification, text analytics, and visualization capabilities. They are in many industries, including banking and finance, publishing, oil and gas, government, and life sciences, and have in common a dependence on their information assets and a need to monetize, manage, and unify them. Prior to Semaphore Jeremy was Managing Director of Microbank Software, a US New York based Fintech firm, acquired by Sungard Data Systems. Jeremy has a BSc with honors in mechanical engineering from Edinburgh University.

Comments

Topics

- Application Development

- Mobility

- Digital Experience

- Company and Community

- Data Platform

- Secure File Transfer

- Infrastructure Management

Sitefinity Training and Certification Now Available.

Let our experts teach you how to use Sitefinity's best-in-class features to deliver compelling digital experiences.

Learn MoreMore From Progress

Latest Stories

in Your Inbox

Subscribe to get all the news, info and tutorials you need to build better business apps and sites

Progress collects the Personal Information set out in our Privacy Policy and the Supplemental Privacy notice for residents of California and other US States and uses it for the purposes stated in that policy.

You can also ask us not to share your Personal Information to third parties here: Do Not Sell or Share My Info

We see that you have already chosen to receive marketing materials from us. If you wish to change this at any time you may do so by clicking here.

Thank you for your continued interest in Progress. Based on either your previous activity on our websites or our ongoing relationship, we will keep you updated on our products, solutions, services, company news and events. If you decide that you want to be removed from our mailing lists at any time, you can change your contact preferences by clicking here.