The Future Is Already Here — It’s Just Not Very Evenly Distributed

If you’re a William Gibson fan, you’ll recognize the quote. The speculative fiction writer uncannily predicted many aspects of modern society decades ago, simply by observing existing trends and extrapolating from them, and created great stories in the process.

The same can be said of modern, information-savvy organizations. The data visionaries among them have an interesting way of anticipating the things to come.

Making Connections

While isolated data sources can be interesting and useful, their value increases exponentially when they are well connected and easy to consume and act on. The rationale for a data platform is simple when seen through this lens: one place where many forms of data can be ingested, connected, made easy to consume, and acted upon.

Ask the people who have built and deployed these platforms, and one word always comes up: agility. Those who have it value it greatly; those who don’t say they want it badly.

Much as manufacturing organizations care about an agile supply chain to support their core business, information-centric organizations are starting to think the same way about their data supply chain.

Data agility here is multidimensional: it is the ability to change any aspect of the data supply chain, anywhere along it, as quickly as needed. In practice, it means being able to easily use new data sources, create new connections, change meanings, and expose things in new ways, and to have those changes propagate immediately throughout, where they can be acted upon.

Hence the term “data agility”.

There is a long history of integrative data platforms to consider through that lens. From early data warehouses to modern data lakes, there’s been no shortage of investments. Unfortunately, there is a consensus that most have come up short in delivering what was originally intended.

But there are interesting exceptions. The visionaries seem to have cracked the code in three important regards.

A Visionary Pattern For Success?

Advanced practitioners tend to frame problems differently, thanks to practical experience of what worked and what didn’t. They (and I) consider these hard-won, valuable lessons.

It starts with an acknowledgment that data can only be valued in rich context, i.e. when connected with everything else that is known. The fact that a “customer bought a product” is maximally useful when you can calibrate that factoid against a grounded reality of what is already known about customers and products.

From a knowledge worker perspective, this can be powerful stuff. Click on any entity (maybe a name, a molecule feature, anything of interest) and see more. Easily explore broader and deeper connections, see how this bit of data sits in the overall organizational flow, where else it is used, and so on. This ability to easily navigate information, its meaning, and its connections becomes a huge organizational knowledge amplifier.

The typical practice of sourcing raw data and serving it up in bulk, lightly filtered form only succeeds in shifting the heavy lifting of making powerful connections, and of building shared understanding, onto the unfortunate end users. Not only is this very inefficient, it makes it very hard to get to anything approaching a shared, actionable “truth” for all.

Context matters. So how do you build a shared context for data? The visionaries will point to three key attributes.

Active Data, Active Metadata — And Active Meaning

The metadata management world exists because there’s a need to provide that reusable, shared context — organizational knowledge — by detailing the definitions, connections, and relationships between different entities and their data elements.

But as typically practiced, it’s a world unto itself: the metadata labels and their connections are decoupled from the source data. It’s useful, but only to a point. There are visionaries who would argue that metadata management should not be a separate function, distinct from data management.

We don’t separate packages from their labels in the physical world, so why would we do so in the digital one? The reason is the same in both worlds: it’s hard to do anything useful with packages (or data) without proper labels.

Change the label (or the interpretation of a label) of a package mid-flight, and you will change where it’s going. Do the same with your data, and you’ll change how it is used: perhaps a new connection, a new lens, or a new security policy. Immediately, if needed.

Active data demands active metadata as well.

This is reflected in the choice of platform — specifically, data and metadata are kept together. Put differently, source data, its labels, and the metadata connections that have been established (e.g. views, knowledge graphs) are seen as one, integrative data layer, and not built separately. We now have a world where data comes with its meaning attached, its role described, and its position, relative to all other data, both mapped and marked. Packages and labels are kept together.
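To make “packages and labels kept together” concrete, here is a minimal Python sketch, assuming a hypothetical in-memory store. It is not MarkLogic’s API or how any particular product implements this; `Envelope`, `READ_POLICY`, `can_read`, and `neighbors` are illustrative names only. Each record carries its source data, its labels, and its connections, and access is decided from the labels at read time, so relabeling a record mid-flight changes how it can be used immediately.

```python
from dataclasses import dataclass, field


@dataclass
class Envelope:
    """Hypothetical record that keeps source data, labels, and connections together."""
    source: dict
    labels: dict = field(default_factory=dict)
    links: list = field(default_factory=list)  # ids of connected records


# Hypothetical policy table: which roles may read records with a given sensitivity label.
READ_POLICY = {
    "public": {"analyst", "support", "auditor"},
    "restricted": {"auditor"},
}


def can_read(env: Envelope, role: str) -> bool:
    # The policy is evaluated against the record's current labels at read time,
    # so changing a label takes effect on the very next read.
    sensitivity = env.labels.get("sensitivity", "restricted")  # unlabeled data defaults to restricted
    return role in READ_POLICY.get(sensitivity, set())


def neighbors(env: Envelope, store: dict) -> list:
    # Follow the connections stored alongside the data itself, skipping dangling ids.
    return [store[target] for target in env.links if target in store]


# Illustrative usage: a purchase connected to what is already known about the customer.
store = {
    "cust-1": Envelope({"name": "Acme Corp"}, {"type": "customer", "sensitivity": "public"}),
    "order-7": Envelope({"product": "widget", "qty": 3},
                        {"type": "purchase", "sensitivity": "public"},
                        links=["cust-1"]),
}
order = store["order-7"]
print(can_read(order, "support"))                          # True
order.labels["sensitivity"] = "restricted"                 # change the label mid-flight
print(can_read(order, "support"))                          # False
print([n.source["name"] for n in neighbors(order, store)])  # ['Acme Corp']
```

The design choice being illustrated is that nothing is copied into a separate metadata system: the policy check and the graph traversal both read from the same record, so a single label change is the only update needed.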

Ideally, it is also the one place to go: an active, concurrent platform that ingests new forms of data while metadata is actively created and connected, and while multiple downstream users run complex queries and applications, feed analytics, and so on. All are connected to a single organizational knowledge base, which each views through whatever lens the task at hand requires.

Active data requires active metadata. But how we interpret information in context (that is, how we determine meaning) can also change dynamically. Hence the need for active meaning.

Meaning Always Matters

Returning to our practitioners, there’s a recognition that intent and meaning matter everywhere to everyone, and that meaning itself can change. Indeed, a key aspect of human intelligence is making sense of what is put in front of you right now, especially if it’s something complex like a report or perhaps a crisis of some sort. Perhaps there’s a need to evaluate old knowledge in a new light. How can such a platform help?

Enter the world of semantics — the science of meaning — and the newer AI that augments human beings for this important task of discerning meaning and intent.

The need to understand meaning is literally everywhere in the data supply chain: from initial interpretation of incoming data, to assisting in creating new connections between data, and — ultimately — helping end-users and their applications better interpret the results.

But, as with everything else, alongside shared and reusable understanding there is also very domain-specific understanding within functions, e.g. tax law within a large enterprise, manufacturing logistics, human resource optimization, drug research, fraud investigations, and so on.

A new context — a new rule, a new insight, a new problem — may mean that many organizational users must change their context as well, and do so as quickly as possible. This becomes a key aspect of data agility.

The Desirable Network Effect

We’re all aware of products and services that increase in value the more people use them, learning more in the process, with social media being a prime example. Even infrequent users of, say, Facebook will get an enriched experience simply by inheriting shared attributes from their personal network and their interests.

The same network effect is wanted when working with organizational knowledge — the more engagement, the better. Like social media platforms, value creation can be surprisingly exponential. One thing inevitably leads to another, and then the initiative starts to enter new territory.

Visionaries will state that adoption is gated by agility. Non-agile responses are less interesting to users than agile ones. For them agility — data agility — is their comparative advantage over all other alternatives.

It’s easy to see why agility matters: someone has a problem, and gets a good answer much faster than they expected. More people bring more problems. They get good answers, faster than expected. Who doesn’t want a fast answer to a hard problem?

Soon, data agility gains a reputation for faster, cheaper, better quality results, with a variety of ancillary tools tied in, depending on what people are doing. More people take notice, bring their data, bring their problems – and bring their organizational knowledge.

Over time, new applications are built on the new system of record. These applications are very different from earlier ones, in that they can bring the full organizational context to bear on the task at hand.

It can be a virtuous cycle that delivers unexpected, outsized value when it happens. And it happens frequently.

There Is A Pattern Here

We see their successes as building organizational intelligence: investing in knowledge capital and the ability to put it to work. The mind and body are not disconnected, and can do amazing things as a result.

The stories people tell will often speak about how one thing led to another. New value was quickly built on old. They were getting smarter on a lot of important things, and rather quickly. Nor do they see this as a finite effort — it’s continuous organizational learning, driven by data agility.

It’s now more clearly understood that agility directly impacts not only how much your organization can learn, but also how quickly it can be learned. This is persistent, reusable knowledge that is never forgotten, and always stands ready for the next problem to solve.

And Anti-Patterns As Well

There are many demonstrably poor choices to consider when discussing this topic. They all seem to fall into the same trap: attempting to integrate disparate functionality produces a brittle result, and thus one that is not agile. Lack of agility doesn’t solve the problem at hand, so the effort is essentially over before it ever gets started. Multiple attempts tend to produce results that show the problem in different ways, but are ultimately unsatisfactory.

For example, there is wide recognition that data quality matters, but what is the best way to achieve it? A consistent, active, and actionable shared metadata view, or perhaps an unwieldy collection of ad-hoc tools and rules? The latter is most definitely not agile.

There is also debate about where best to improve the quality of data. The answer — through this lens — is that it is a continual, dynamic, and actionable process everywhere. It is relevant during ingestion, during categorization and evaluation, and shows up yet again during data consumption.

Taking a lesson from manufacturing, data quality is needed everywhere — and in different ways — all along the line. Agility matters here as well.

Other ways of introducing unwanted complexity, and thus inhibiting agility, include attempting to integrate multiple databases and tools, attempting to create a separate metadata layer, trying to force-fit complex data into an analytical platform, and gluing components together on AWS or similar.

It’s all great stuff and well-intentioned — but agile it is not. It also should be mentioned that the classic IT project methodology is not helpful here. Why? It isn’t agile either.

The visionaries will say that they tried some of these — and others — and will say that they better understood the nature of the problem as a result. Ultimately, they had to overcome organizational thinking to take the next step. Dividing the problem into pieces and attempting to integrate core functionality turned out to be an anti-pattern.

The notion of a “data fabric” — as popularized by Gartner — is not inherently an anti-pattern here. As with any high-level architecture, one should be respectful of where the boundaries are, and what the required integration points will be. When it comes to integration, less is more.

Back To Active Metadata

A more promising thread in the analyst world is the notion of active metadata: the idea that the labels describing data, and their meaning, can change how data is acted upon, in real time if needed. Clearly, this makes a lot of sense, but how?

The standard assumption that metadata and data remain separate (with metadata thought of as distinct from source data) limits data agility each and every time. At a high level, it’s much harder to learn and act when the two functions are thought of separately.

Outcomes are better when mind and body are working together. To simplify: active metadata should be all about delivering data agility.

Visionaries Everywhere?

Our best customers fit a profile: we often find them in large enterprise IT settings with very big problems that need solving. They do not accept the status quo, and are willing to lead others in what they believe is the right direction. We are rarely their first choice, often brought in over considerable objections after multiple efforts have failed to deliver.

Their stories are compelling: big problems, many failed attempts, a new approach was tried, it showed results faster than anything else, enthusiasm grew, external stakeholder requirements were met, obstacles were overcome, things got started and truly exceptional outcomes were the result — and continue to deliver:

- A tech pubs project that started as simply organizing content is now a paid search portal

- An aerospace manufacturer now has a “single truth” regarding how aircraft are built and tested

- A large government agency is using a single platform to consolidate and modernize over 60 legacy systems

- An insurance company has created a data hub that takes data from any source, interprets it, and makes it useful for any purpose

- A very large bank has created a single view of all relevant customer information serving multiple functions

- A big Pharma R&D organization amplifies its value with a dynamic, unified view instead of puddles of information

- A logistics company now has new-found agility to react to supply chain disruptions

- An energy trader combats fraud more effectively

- Intelligence agencies can better piece together and act on scraps of information to deliver actionable intelligence

There is an emergent pattern here: using a metadata-centric and semantically-aware platform to solve important complex data problems. I would offer that the future is already here — it’s just not very evenly distributed.

From Visionary To Mainstream

I’ve enjoyed watching how newer, “visionary” technologies go mainstream — and how others fail to cross the chasm, so to speak. The common thread I’ve noticed is simple: the successful ones solve a big, important problem that can’t easily be solved in other ways. Novelty can be interesting, but not always useful.

There appear to be many “complex data meets active metadata” platform problems to solve, with more cropping up every day. If you like, please visit our customer pages.

There are great stories behind each and every one of them, some of which we can share. The lessons they’ve learned and the patterns they use are identifiable and repeatable.

For our best customers, visionary is the new normal.

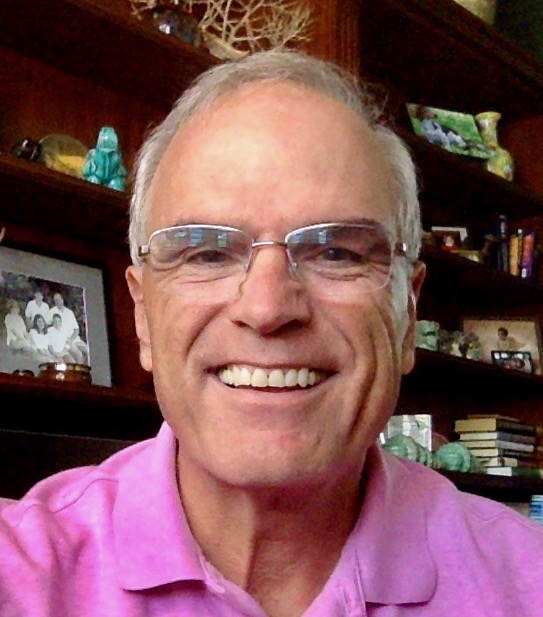

Chuck Hollis

Chuck joined the MarkLogic team in 2021, coming from Oracle as SVP Portfolio Management. Prior to Oracle, he was at VMware working on virtual storage. Chuck came to VMware after almost 20 years at EMC, working in a variety of field, product, and alliance leadership roles.

Chuck lives in Vero Beach, Florida with his wife and three dogs. He enjoys discussing the big ideas that are shaping the IT industry.
